In October 2014, Databricks participated in the Sort Benchmark and set a new world record for sorting 100 terabytes (TB) of data, or 1 trillion 100-byte records. The team used Apache Spark on 206 EC2 virtual machines and sorted 100 TB of data in 23 minutes.

In comparison, the previous world record set by Hadoop MapReduce used 2100 machines in a private data center and took 72 minutes. This entry tied with a UCSD research team building high performance systems and we jointly set a new world record.

Additionally, while no official petabyte (PB) sort competition exists, we pushed Apache Spark (Spark) further to also sort 1 PB of data (10 trillion records) on 190 machines in under 4 hours. This PB time beats previously reported results based on Hadoop MapReduce (16 hours on 3800 machines). To the best of our knowledge, this is the first time a combination of open source software (Spark) and public cloud infrastructure (EC2) was used to set a new record on 100 TB sort, and the first petabyte-scale sort ever done in a public cloud.

Named after Jim Gray, the benchmark workload is resource intensive by any measure: sorting 100 TB of data following the strict rules generates 500 TB of disk I/O and 200 TB of network I/O. Organizations from around the world often build dedicated sort machines (specialized software and sometimes specialized hardware) to compete in this benchmark.

| | Hadoop MR Record | Spark Record | Spark 1 PB |
|---|---|---|---|
| Data Size | 102.5 TB | 100 TB | 1000 TB |
| Elapsed Time | 72 mins | 23 mins | 234 mins |
| # Nodes | 2100 | 206 | 190 |
| # Cores | 50400 physical | 6592 virtualized | 6080 virtualized |
| Cluster disk throughput | 3150 GB/s (est.) | 618 GB/s | 570 GB/s |
| Sort Benchmark Daytona Rules | Yes | Yes | No |
| Network | dedicated data center, 10Gbps | virtualized (EC2) 10Gbps network | virtualized (EC2) 10Gbps network |
| Sort rate | 1.42 TB/min | 4.27 TB/min | 4.27 TB/min |
| Sort rate/node | 0.67 GB/min | 20.7 GB/min | 22.5 GB/min |

What is Spark?

Widely deemed the successor to Hadoop MapReduce, Apache Spark is a fast and general engine for large-scale data processing. It provides programming APIs in Java, Python, Scala, and SQL, and can be used to efficiently execute diverse workloads, including common ETL, data streaming, machine learning, graph computation, and SQL.

Spark is one of the most actively developed open source projects. It had over 465 contributors in 2014, making it the most active project both in the Apache Software Foundation and among Big Data open source projects.

Sorting

The Sort Benchmark (Benchmark) was initially proposed and sponsored by Jim Gray to measure the state-of-the-art development of computer systems. Since Jim Gray passed away in 2007, the Benchmark has been run by a consortium of past winners. The Benchmark consists of multiple categories, each with a different focus. Daytona Gray (named after Dr. Gray) is the most challenging category, as it requires participating systems to sort 100 terabytes (TB) of data in the fastest time possible, regardless of computing resources used.

At the core of sorting is the shuffle operation, which moves data across all machines. Shuffle underpins almost all distributed data processing workloads. For example, a SQL query joining two disparate data sources uses shuffle to move tuples that should be joined together onto the same machine, and collaborative filtering algorithms such as ALS rely on shuffle to send user/product ratings and weights across the network.
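As a rough illustration of the routing step that shuffle performs for a join, the sketch below assigns each record to a reducer by hashing its key, so rows with equal keys end up in the same bucket. The class and method names are hypothetical, the records are plain `{key, value}` string pairs, and this is not Spark's shuffle code; a real shuffle also moves the buckets across the network.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical illustration of shuffle routing; not Spark's implementation.
final class ShuffleSketch {
    // Map a key to one of numPartitions reducers.
    // floorMod keeps the result non-negative even for negative hash codes.
    static int partitionFor(String key, int numPartitions) {
        return Math.floorMod(key.hashCode(), numPartitions);
    }

    // Group {key, value} records into one bucket per reducer.
    static List<List<String[]>> shuffle(List<String[]> records, int numPartitions) {
        List<List<String[]>> buckets = new ArrayList<>();
        for (int i = 0; i < numPartitions; i++) {
            buckets.add(new ArrayList<>());
        }
        for (String[] record : records) {
            buckets.get(partitionFor(record[0], numPartitions)).add(record);
        }
        return buckets;
    }
}
```

Because the partition depends only on the key, tuples from two different inputs that share a join key are sent to the same reducer, which can then join them locally.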

Most data pipelines start with a large amount of raw data, but as the pipeline progresses, the amount of data is reduced due to filtering out irrelevant data or more compact representation of intermediate data. A SQL query on 100 TB of raw input data most likely only shuffles a tiny fraction of the 100 TB across the network. This pattern is also reflected in the naming of the popular data processing framework MapReduce.

Sorting, however, is one of the most challenging workloads because there is no reduction of data along the pipeline. Sorting 100 TB of input data requires shuffling 100 TB of data across the network. As a matter of fact, the Daytona Gray competition requires us to replicate both input and output data for fault-tolerance, and thus sorting 100 TB of data effectively generates 500 TB of disk I/O and 200 TB of network I/O.

For the above reasons, when we were looking for metrics to measure and improve Spark, sorting, one of the most demanding workloads, became a natural choice to focus on.

What made it possible?

A lot of development has gone into improving Spark for very large scale workloads. In particular, there are three major pieces of work that are highly relevant to this benchmark.

First and foremost, in Spark 1.1 we introduced a new shuffle implementation called sort-based shuffle (SPARK-2045). The previous Spark shuffle implementation was hash-based and required maintaining P (the number of reduce partitions) concurrent buffers in memory. In sort-based shuffle, only a single buffer is required at any given point. This has substantially reduced memory overhead during shuffle and lets Spark support workloads with hundreds of thousands of tasks in a single stage (our PB sort used 250,000 tasks).
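The difference between the two shuffle strategies can be sketched in a few lines. The example below is a simplified, in-memory illustration under our own assumptions (hypothetical class names, string records, a hash partitioner); it is not the code from SPARK-2045. Instead of keeping one buffer per reduce partition, a map task keeps a single buffer, sorts it by partition ID, and records the offsets at which each partition's slice begins.

```java
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

// Hypothetical sketch of the idea behind sort-based shuffle; not Spark's code.
final class SortShuffleSketch {
    // One map task's output: records laid out contiguously by partition.
    // Reducer p reads records[offsets[p]] up to (not including) records[offsets[p + 1]].
    static final class MapOutput {
        final String[] records;
        final int[] offsets;

        MapOutput(String[] records, int[] offsets) {
            this.records = records;
            this.offsets = offsets;
        }
    }

    static int partitionFor(String record, int numPartitions) {
        return Math.floorMod(record.hashCode(), numPartitions);
    }

    static MapOutput write(List<String> input, int numPartitions) {
        // A single buffer, regardless of how many reduce partitions exist.
        String[] buffer = input.toArray(new String[0]);
        // Sort by partition ID so each partition becomes one contiguous slice.
        Arrays.sort(buffer, Comparator.comparingInt((String r) -> partitionFor(r, numPartitions)));

        // Count records per partition, then prefix-sum the counts into offsets.
        int[] offsets = new int[numPartitions + 1];
        for (String r : buffer) {
            offsets[partitionFor(r, numPartitions) + 1]++;
        }
        for (int p = 0; p < numPartitions; p++) {
            offsets[p + 1] += offsets[p];
        }
        return new MapOutput(buffer, offsets);
    }
}
```

With this layout the memory needed by a map task no longer grows with P, which is what makes stages with very large numbers of reduce partitions practical.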

Second, we revamped the network module in Spark based on Netty’s Epoll native socket transport via JNI (SPARK-2468). The new module also maintains its own pool of memory, thus bypassing the JVM’s memory allocator and reducing the impact of garbage collection.

Last but not least, we created a new external shuffle service (SPARK-3796) that is decoupled from the Spark executor itself. This new service builds on the aforementioned network module and ensures that Spark can still serve shuffle files even when the executors are in GC pauses.

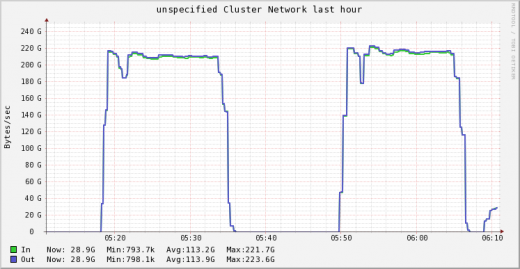

With these three changes, our Spark cluster was able to sustain 3GB/s/node I/O activity during the map phase, and 1.1 GB/s/node network activity during the reduce phase, saturating the 10Gbps link available on these machines.
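To see why 1.1 GB/s per node counts as saturating a 10Gbps link, it helps to convert units. The small sketch below does the arithmetic, assuming decimal units (1 GB = 8 Gb) and ignoring protocol overhead; the class and method names are ours. It also cross-checks the per-node disk figure against the 206-node cluster total in the table above.

```java
// Back-of-the-envelope check of the throughput figures; decimal units assumed.
final class LinkMath {
    // Convert gigabytes per second to gigabits per second.
    static double toGigabits(double gigabytesPerSecond) {
        return gigabytesPerSecond * 8.0;
    }

    // Fraction of the link consumed by the given transfer rate.
    static double utilization(double gigabytesPerSecond, double linkGigabits) {
        return toGigabits(gigabytesPerSecond) / linkGigabits;
    }

    public static void main(String[] args) {
        System.out.printf("network: %.1f Gbps, %.0f%% of a 10 Gbps link%n",
                toGigabits(1.1), 100.0 * utilization(1.1, 10.0));
        System.out.printf("aggregate disk I/O: %.0f GB/s%n", 3.0 * 206);
    }
}
```

Under these assumptions, 1.1 GB/s is about 8.8 Gbps, or roughly 88% of the nominal link rate, and 3 GB/s across 206 nodes gives the 618 GB/s cluster disk throughput reported for the 100 TB run.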

The nitty-gritty

TimSort: In Spark 1.1, we switched our default sorting algorithm from quicksort to TimSort, a derivation of merge sort and insertion sort. It performs better than quicksort in most real-world datasets, especially for datasets that are partially ordered. We use TimSort in both the map and reduce phases.

Exploiting cache locality: In the sort benchmark, each record is 100 bytes, where the sort key is the first 10 bytes. As we were profiling our sort program, we noticed the cache miss rate was high, because each comparison required an object pointer lookup that was random. We redesigned our record in-memory layout to represent each record as one 16-byte record (two longs in the JVM), where the first 10 bytes represent the sort key, and the last 4 bytes represent the position of the record (in reality it is slightly more complicated than this due to endianness and signedness). This way, each comparison only required a cache lookup that was mostly sequential, rather than a random memory lookup. Originally proposed by Chris Nyberg et al. in AlphaSort, this is a common technique used in high-performance systems.
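A minimal sketch of this layout is shown below. It is our own illustration, not the benchmark code: the class name, the exact bit positions, and the use of insertion sort (chosen only for brevity) are all assumptions. Each record becomes two longs in one flat `long[]`: the first long holds key bytes 0 to 7, and the second holds key bytes 8 and 9 in its top 16 bits and the record position in its low 32 bits. Packing big-endian and comparing as unsigned values is one way to handle the endianness and signedness issues mentioned above.

```java
// Hypothetical sketch of a 16-byte (two-long) sort entry per record.
final class PrefixSortSketch {
    // Key bytes 0..7, big-endian, so unsigned long order matches byte order.
    static long packHigh(byte[] key) {
        long v = 0;
        for (int i = 0; i < 8; i++) {
            v = (v << 8) | (key[i] & 0xFFL);
        }
        return v;
    }

    // Key bytes 8..9 in the top 16 bits, record position in the low 32 bits.
    static long packLow(byte[] key, int position) {
        return ((key[8] & 0xFFL) << 56) | ((key[9] & 0xFFL) << 48) | (position & 0xFFFFFFFFL);
    }

    // Compare two entries as unsigned 128-bit values.
    static int compare(long hiA, long loA, long hiB, long loB) {
        int c = Long.compareUnsigned(hiA, hiB);
        return c != 0 ? c : Long.compareUnsigned(loA, loB);
    }

    // Returns record positions in ascending key order. Keys need at least 10 bytes.
    static int[] sortedPositions(byte[][] keys) {
        int n = keys.length;
        long[] entries = new long[2 * n]; // one contiguous array, no per-record objects
        for (int i = 0; i < n; i++) {
            entries[2 * i] = packHigh(keys[i]);
            entries[2 * i + 1] = packLow(keys[i], i);
        }
        // Insertion sort over the packed entries; comparisons never follow a pointer.
        for (int i = 1; i < n; i++) {
            long hi = entries[2 * i];
            long lo = entries[2 * i + 1];
            int j = i - 1;
            while (j >= 0 && compare(entries[2 * j], entries[2 * j + 1], hi, lo) > 0) {
                entries[2 * (j + 1)] = entries[2 * j];
                entries[2 * (j + 1) + 1] = entries[2 * j + 1];
                j--;
            }
            entries[2 * (j + 1)] = hi;
            entries[2 * (j + 1) + 1] = lo;
        }
        int[] positions = new int[n];
        for (int i = 0; i < n; i++) {
            positions[i] = (int) entries[2 * i + 1]; // low 32 bits hold the position
        }
        return positions;
    }
}
```

A production version would run a faster algorithm such as TimSort over the same flat array; the point of the sketch is only that every comparison reads adjacent longs instead of dereferencing record objects scattered across the heap.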

Spark’s nice programming abstraction and architecture allow us to implement these improvements in the user space (without modifying Spark) in a few lines of code. Combining TimSort with our new layout to exploit cache locality, the CPU time for sorting was reduced by a factor of 5.

Fault-tolerance at scale: At scale a lot of things can break. In the course of this experiment, we have seen nodes going away due to network connectivity issues, the Linux kernel spinning in a loop, or nodes pausing due to memory defrag. Fortunately, Spark is fault-tolerant and recovered from these failures.

Power of the cloud: As mentioned previously, we leveraged 206 i2.8xlarge instances to run this I/O intensive experiment. These instances deliver high I/O throughput via SSDs. We put these instances in a placement group in a VPC to enable enhanced networking via single root I/O virtualization (SR-IOV). Enabling enhanced networking results in higher performance (10Gbps), lower latency, and lower jitter. We would like to thank everyone involved at AWS for their help making this happen including: the AWS EC2 services team, AWS EC2 Business Development team, AWS product marketing and AWS solutions architecture team. Without them this experiment would not have been possible.