There was a time in computing when performance increases could be had by designing a more complex processor or turning up the clock speed. Those days are largely behind us. The most common solution at present is to add more cores to a symmetric multiprocessing (SMP) system, but this has practical scaling limits, and there are downsides to a one-size-fits-all processor architecture.

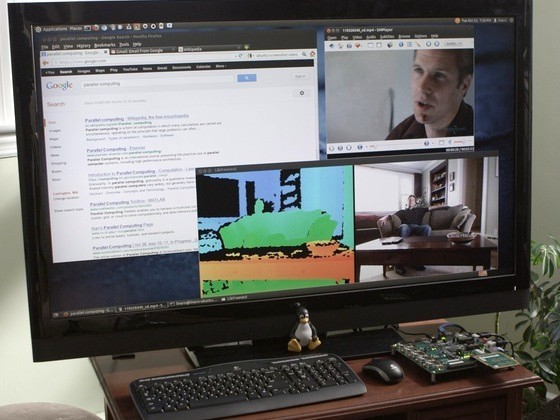

The world’s most powerful computers make use of parallel computing, using configurations such as Linux-based Beowulf clusters to distribute workloads across many thousands of processor cores. Closer to the other end of the scale, on desktop and mobile devices, compute-intensive graphics processing is handled by high-performance GPUs that are finely tuned for the task at hand.

Parallel computing and heterogeneous systems, which mix different types of computational units, can break through SMP scaling limits and achieve increased performance with reduced power consumption. However, they come with their own challenges, and making parallel computing easy to use has been described as “a problem as hard as any that computer science has faced.”

With these challenges in mind, the Parallella project has set out to help close the knowledge gap by developing an affordable, high-performance and truly open parallel computing platform.